Flagship Research Project

Biologically Inspired Multimodal Retrieval using Spiking Neural Networks and FAISS

Problem

Multimodal retrieval systems typically rely on dense artificial neural networks, which are not biologically plausible and do not exploit the sparse, event-driven temporal processing that makes spiking models energy-efficient. This project explores whether spiking neural networks can learn aligned image–text representations for retrieval, inspired by episodic memory mechanisms in the brain.

Approach

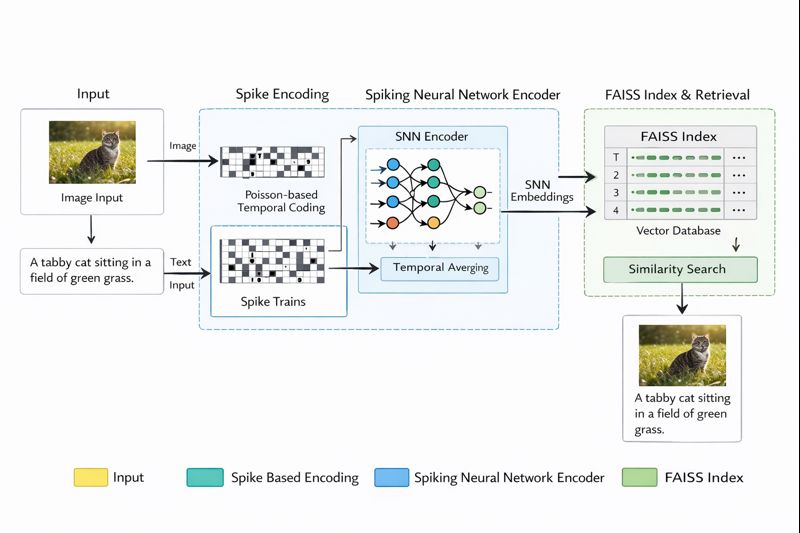

I designed an end-to-end multimodal retrieval pipeline that uses spiking neural networks as encoders for both the image and text modalities. Inputs are converted into spike trains using Poisson encoding, the encoders are trained with a contrastive objective, and the learned embeddings are indexed with FAISS for efficient similarity search.
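
To make the Poisson encoding step concrete, here is a minimal sketch in plain Python. It assumes inputs are already normalized to [0, 1] and uses the common per-time-step Bernoulli approximation of a Poisson process. The function name `poisson_encode` and the parameters `num_steps`, `max_rate`, and `seed` are illustrative and are not taken from the project code.

```python
import random

def poisson_encode(intensities, num_steps, max_rate=1.0, seed=0):
    """Convert values in [0, 1] into binary spike trains.

    At each time step, input i spikes with probability
    intensities[i] * max_rate (Bernoulli approximation of a Poisson process).
    Returns num_steps frames, each a list of 0/1 spikes.
    """
    rng = random.Random(seed)
    # Clamp to [0, 1] so every entry is a valid probability (assumes max_rate <= 1).
    probs = [min(max(x, 0.0), 1.0) * max_rate for x in intensities]
    return [[1 if rng.random() < p else 0 for p in probs] for _ in range(num_steps)]

pixels = [0.0, 0.25, 0.9]  # hypothetical normalized pixel intensities
spikes = poisson_encode(pixels, num_steps=1000)

# The empirical firing rate of each input should approximate its intensity.
rates = [sum(frame[i] for frame in spikes) / len(spikes) for i in range(len(pixels))]
print(rates)
```

Brighter inputs fire more often, so the intensity is carried by the spike rate over the simulation window rather than by a single real-valued activation.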

System Architecture

- Image encoder and text encoder implemented using LIF-based spiking neurons

- Surrogate gradient learning for backpropagation through spikes

- Shared embedding space for cross-modal alignment

- FAISS-based nearest neighbor indexing for retrieval
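
The first two components above can be sketched for a single neuron as follows. This is an illustration under stated assumptions, not the project's implementation: it uses a discrete-time LIF update with a soft (subtractive) reset and a fast-sigmoid surrogate derivative, and the values of `beta`, `threshold`, and `slope` are arbitrary.

```python
def lif_forward(currents, beta=0.9, threshold=1.0):
    """Simulate one leaky integrate-and-fire neuron over a sequence of input currents.

    Membrane update: v[t] = beta * v[t-1] + I[t]. A spike is emitted when
    v[t] >= threshold, after which the threshold is subtracted (soft reset).
    """
    v, spikes, potentials = 0.0, [], []
    for i_t in currents:
        v = beta * v + i_t
        spike = 1 if v >= threshold else 0
        potentials.append(v)    # pre-reset potential, input to the surrogate
        spikes.append(spike)
        v -= spike * threshold  # soft reset only when a spike occurred
    return spikes, potentials

def fast_sigmoid_grad(v, threshold=1.0, slope=25.0):
    """Surrogate for d(spike)/dv: 1 / (1 + slope * |v - threshold|)^2."""
    return 1.0 / (1.0 + slope * abs(v - threshold)) ** 2

spikes, potentials = lif_forward([0.3] * 10)
grads = [fast_sigmoid_grad(v) for v in potentials]
print(spikes)
```

The forward pass keeps the hard threshold, whose true derivative is zero almost everywhere. During backpropagation that derivative is replaced by the smooth surrogate, which is largest when the membrane potential is near the threshold.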

Experimental Setup

- Dataset: Image–text paired dataset

- Embedding dimension: 512

- Training: Contrastive loss (training converged stably)

- Evaluation metrics: Recall@1, Recall@5, Mean Reciprocal Rank (MRR)
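
The evaluation metrics listed above can be computed from a query-by-candidate similarity matrix. The sketch below assumes a square matrix in which candidate i is the single correct match for query i. The function name `retrieval_metrics` and the toy scores are hypothetical.

```python
def retrieval_metrics(similarity, ks=(1, 5)):
    """Compute Recall@K and MRR for a square query-by-candidate similarity matrix.

    Assumes candidate i is the single correct match for query i.
    """
    n = len(similarity)
    ranks = []
    for q, row in enumerate(similarity):
        # Rank of the true match = 1 + number of candidates scored strictly higher.
        ranks.append(1 + sum(1 for s in row if s > row[q]))
    metrics = {f"recall@{k}": sum(r <= k for r in ranks) / n for k in ks}
    metrics["mrr"] = sum(1.0 / r for r in ranks) / n
    return metrics

# Toy scores: queries 0 and 2 rank their match first, query 1 ranks it third.
sim = [
    [0.9, 0.1, 0.2],
    [0.8, 0.3, 0.5],
    [0.1, 0.2, 0.7],
]
metrics = retrieval_metrics(sim, ks=(1, 2))
print(metrics)
```

Recall@K is the fraction of queries whose true match appears in the top K results, while MRR averages the reciprocal rank of the true match, so it also rewards near misses.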

Results

Training converged stably, and the model retrieved matching items across modalities, as measured by Recall@1, Recall@5, and MRR. These results support the feasibility of spike-based multimodal representation learning.

Reproducibility

The complete codebase includes structured experiment tracking, configuration files, and evaluation scripts to ensure reproducibility.

Applied Research Project

SmartCampus Federated Learning for Face Recognition

Problem

Centralized face recognition systems raise privacy concerns when training data originates from multiple stakeholders. This project addresses the challenge of building a robust face recognition model without sharing raw facial data across clients.

Approach

I implemented a federated learning pipeline with decentralized clients and a central server that aggregates their model updates. Each client trains locally on its private facial data and shares only model updates, never raw images. The system uses a lightweight CNN to keep training efficient.
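
The server-side aggregation step can be illustrated with a weighted average of client parameters. This is a sketch that assumes FedAvg-style weighting by local example count and treats each model as a flat list of floats. In the project itself aggregation is handled by the federated learning framework, and the name `aggregate` is hypothetical.

```python
def aggregate(client_weights, client_sizes):
    """Average client parameter vectors, weighted by local example counts."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[j] * n for w, n in zip(client_weights, client_sizes)) / total
        for j in range(dim)
    ]

# Two hypothetical clients holding 10 and 30 local examples.
global_weights = aggregate([[1.0, 2.0], [3.0, 6.0]], client_sizes=[10, 30])
print(global_weights)
```

Clients with more data pull the global model further toward their local solution, and only parameter vectors cross the network.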

System Architecture

- Federated client–server setup using Flower

- FedProx strategy to handle non-IID data

- Local training with periodic global aggregation
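
FedProx differs from plain federated averaging in the local objective: each client minimizes its own loss plus a proximal term (mu / 2) * ||w - w_global||^2, which limits how far local weights drift from the global model on non-IID data. A minimal sketch of one local gradient step, assuming flat parameter lists and illustrative values for `lr` and `mu`:

```python
def fedprox_step(w, w_global, grad, lr=0.1, mu=0.01):
    """One local SGD step on loss(w) + (mu / 2) * ||w - w_global||^2.

    grad is the gradient of the client's own loss at w; the proximal term
    contributes mu * (w - w_global) to the total gradient.
    """
    return [
        wi - lr * (gi + mu * (wi - wgi))
        for wi, gi, wgi in zip(w, grad, w_global)
    ]

# With a zero task gradient, the proximal term alone pulls w toward w_global.
pulled = fedprox_step([1.0], w_global=[0.0], grad=[0.0], lr=0.1, mu=1.0)
# With mu = 0 the update reduces to ordinary SGD.
plain = fedprox_step([1.0], w_global=[0.0], grad=[0.5], lr=0.1, mu=0.0)
print(pulled, plain)
```

A larger `mu` keeps clients closer to the global model at the cost of slower local adaptation.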

Experimental Setup

- Dataset: AT&T Faces dataset

- Clients: Simulated multi-client environment

- Metrics: Client-side accuracy across communication rounds

- Hardware: CPU-based training

Results

Client-side accuracy improved consistently across communication rounds while raw facial data remained on the clients, supporting the viability of decentralized training for face recognition.

Reproducibility

The repository includes preprocessing scripts, training logic, and evaluation utilities for end-to-end reproducibility.

Additional implementation details, experiment logs, and results are available in the respective GitHub repositories.